FAILURE IN CHROME

A Wrong Biennale Pavilion

Curators: Tom Milnes and Emile Zile

Artists: Ian Keaveny, Sarah Levinsky & Adam Russell, Kiah Reading, Tom Smith, Joshua Byron, Marc Blazel, Samuel Fouracre, Micheál O’Connell/Mocksim, Naomi Morris, Mette Sterre.

1st Nov 2017 - 31 Jan 2018.

FAILURE IN CHROME showcases the talent from around the world working with digital performativity. Artists’ work deals with research interactions between digital, online spaces and/or their physical materiality within performance, with an approach which creates discourse around error or failure within these manifestations. Each artist will exhibit for one week on DAR’s online residency space with a live-stream performance at the end of each week by the resident artist.

17th Jan - 23rd Jan

Mette Sterre

what do we see in the inside of our eyelids

10th Jan - 16th Jan

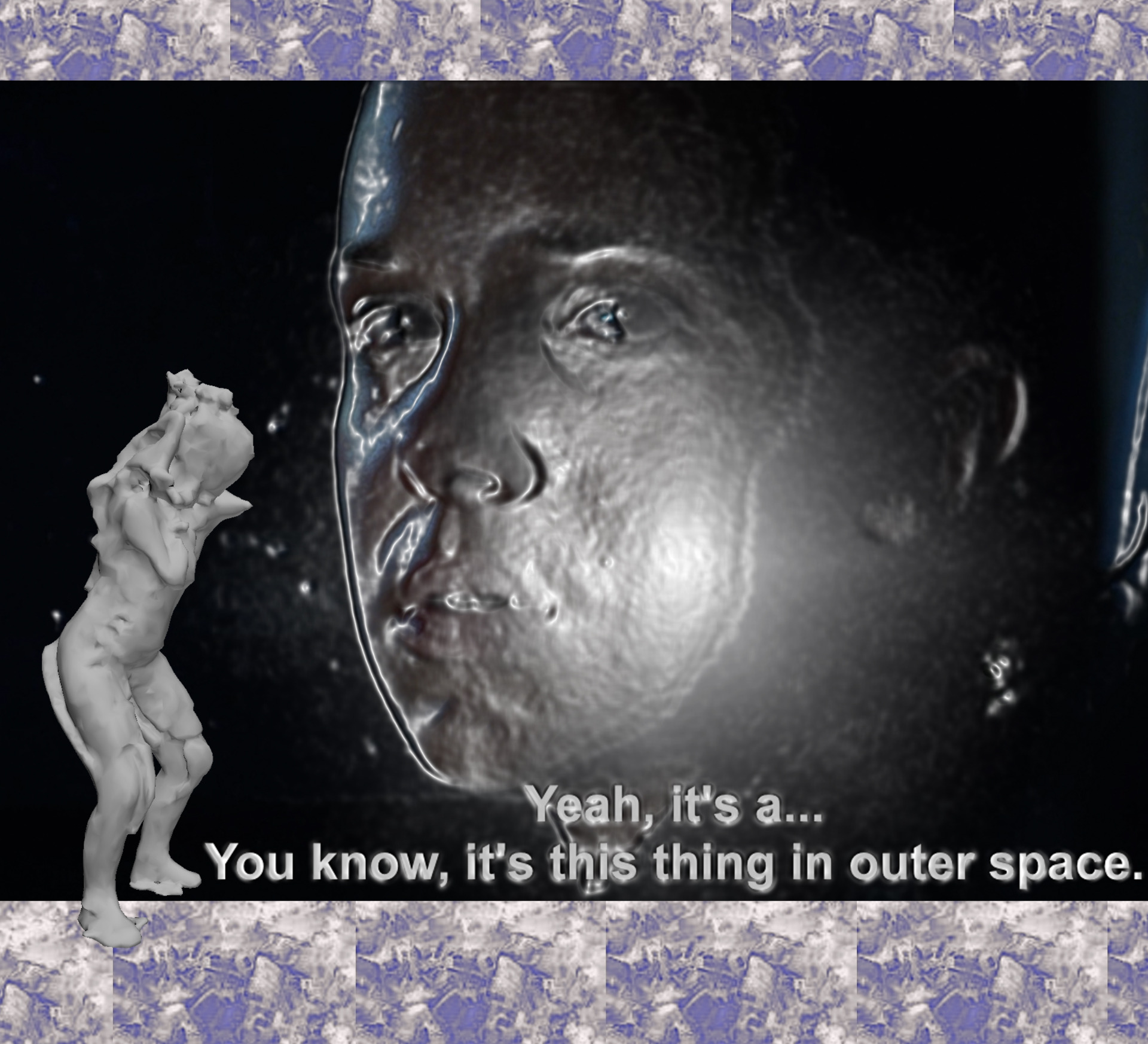

Micheál O'Connell aka Mocksim

Day 07

Streaming live from 8pm (GMT). Sample footage below. That's it from me at Mocksim.org.

Day 06

Some more concoctions below from exercises using sat-navs and maps-apps (wrongly).

Other notes:

- Information on speech synthesis for those who are interested: http://www.explainthatstuff.com/how-speech-synthesis-works.html

- There is some technical rationale which may explain why 'female' voices are more common: https://www.readspeaker.com/using-female-voices-in-speech-synthesis/

- But maybe there are ideological reasons too, and what is the impact of particular accents being selected? In the UK and Ireland the default voice tends to be a kind of South of England, arguably middle class, accent. I was thinking that Sat-navs often sound like the British Prime Minister, Theresa May.

Day 05

Been on the road today again. Made a Sat-nav compete with a Maps-app. Low on drama this sample from the experiments. Some action at the beginning and after about 1min 40sec...

Day 04

Remembering the Lowtech Manifesto

Intense discussions about how to stream, on the move, with inevitable limitations on bandwidth. Thinking of the old art/design adage of 'truth to materials'. A person might submit to what the materials, in this case networked devices and applications, are doing.

Day 03

Experimenting on the buses. Maps goes ape because it cannot control the journey. Luckily the app has company in that the bus, which also identifies as female, talks back.

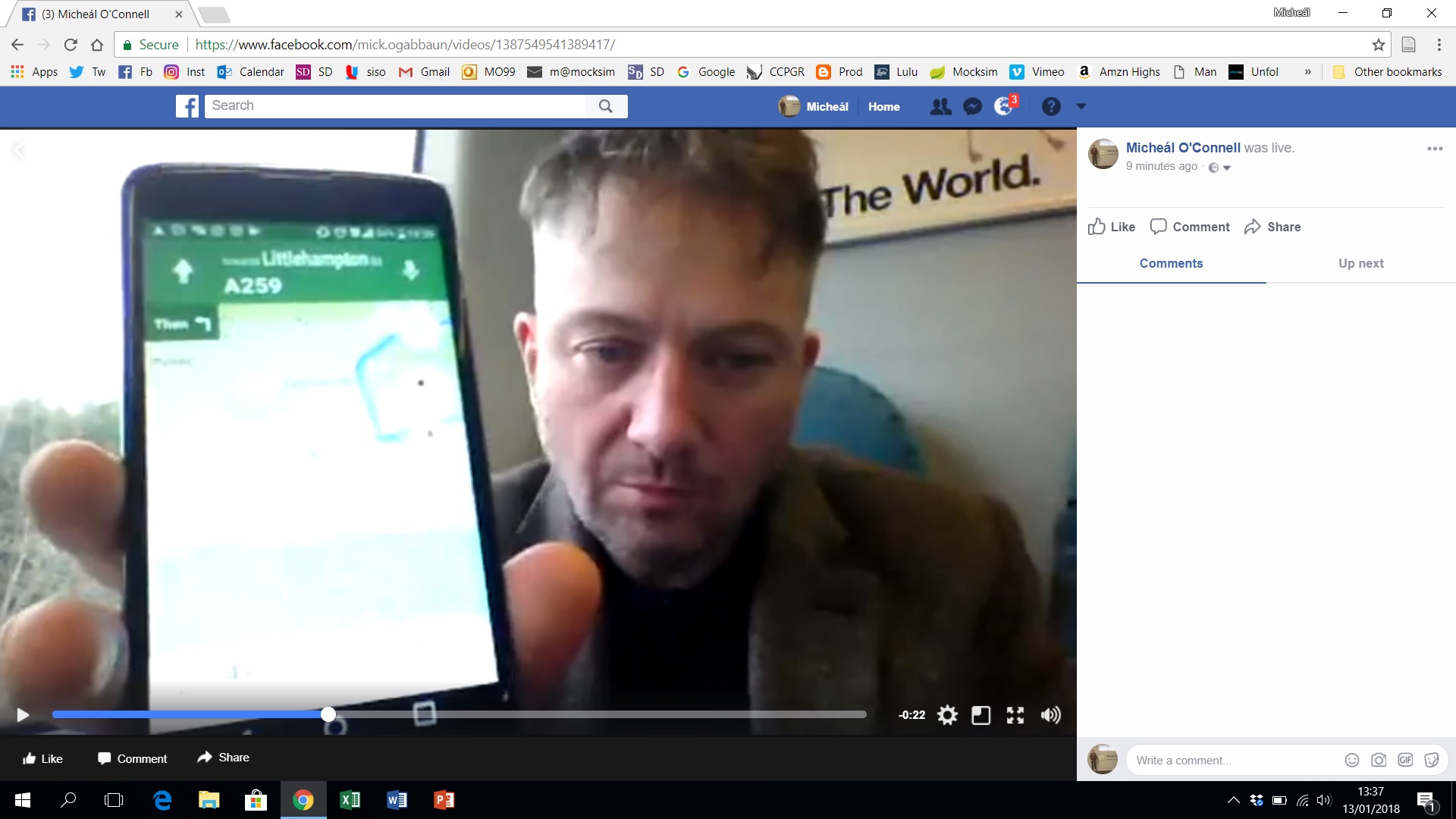

Day 02

Day 2 was frustrating, though I took a car out with two passengers - thanks Ruby and Betty - in an attempt to test live streaming onto Facebook via my laptop, connected to tethering wifi on my handset. Maps apps were running on this and Betty's handset at the same time. I drove in the opposite direction to the programmed destination (Fabrica Gallery in Brighton, UK). Betty held the two handsets and the laptop, and Ruby made video recordings using two DSLRs. Much glitching was experienced, as expected, on the visual front but the sound was fine and the signal was lost properly only once. Some of the 150 or so viewers on Facebook seemed to like the glitches and sent screenshots and the like. I'm not that interested in the tired old subject of 'glitch art' and am more interested in upsetting the maps apps a little. Not sure where this will lead. Luckily there are 5 days before the live streaming on 16th, which will probably take place from 8pm: I'll decide soon. Technology used today includes 1) 3 mobile phone handsets, 2) Car-club booking software, 3) A car, 4) a PC laptop, 5) 2 DSLRs, 6) it'seeze, 7) Facebook, 8) Instagram, 9) Twitter, 10) e-mail, 11) shoes, 12) clothes, 13) roads etc. etc. etc. This is what you call a residency. Some of the screenshots posted by others while I was driving:

DAY 01

Day 1 so far has involved checking whether video recording and the maps app will run at the same time on my cruddy LG (Life's Good) handset. It turns out that is possible, which is pleasing and surprising. The maps app instructions were then disobeyed. Experimental recordings were made while walking around on foot. Generally, breaking the mundane everyday algorithmic and bureaucratic codes is of interest. (I see bureaucratic systems and algorithms as similar.) Some video footage from a drive on Tuesday – in a car club car – captured on a DSLR, with the maps app on the mobile device talking, was studied. My 13-year-old daughter, whom I was collecting for school, was in the car. She helped a bit (in the compulsory slightly-resentful way that teenagers do with parents). A low-tech approach is something being advocated too. But not necessarily low-tech, more any-tech or whatever-tech, i.e. accessing tools and technologies which happen to be at hand. The world is full of this stuff. We live in a technologically rich landscape. People tend to take a lot for granted. I don’t. I’m uploading some samples from today now. See above.

Some background work. A starting point. I had been incorporating sat-nav and maps app output. See below.

3rd Jan - 9th Jan

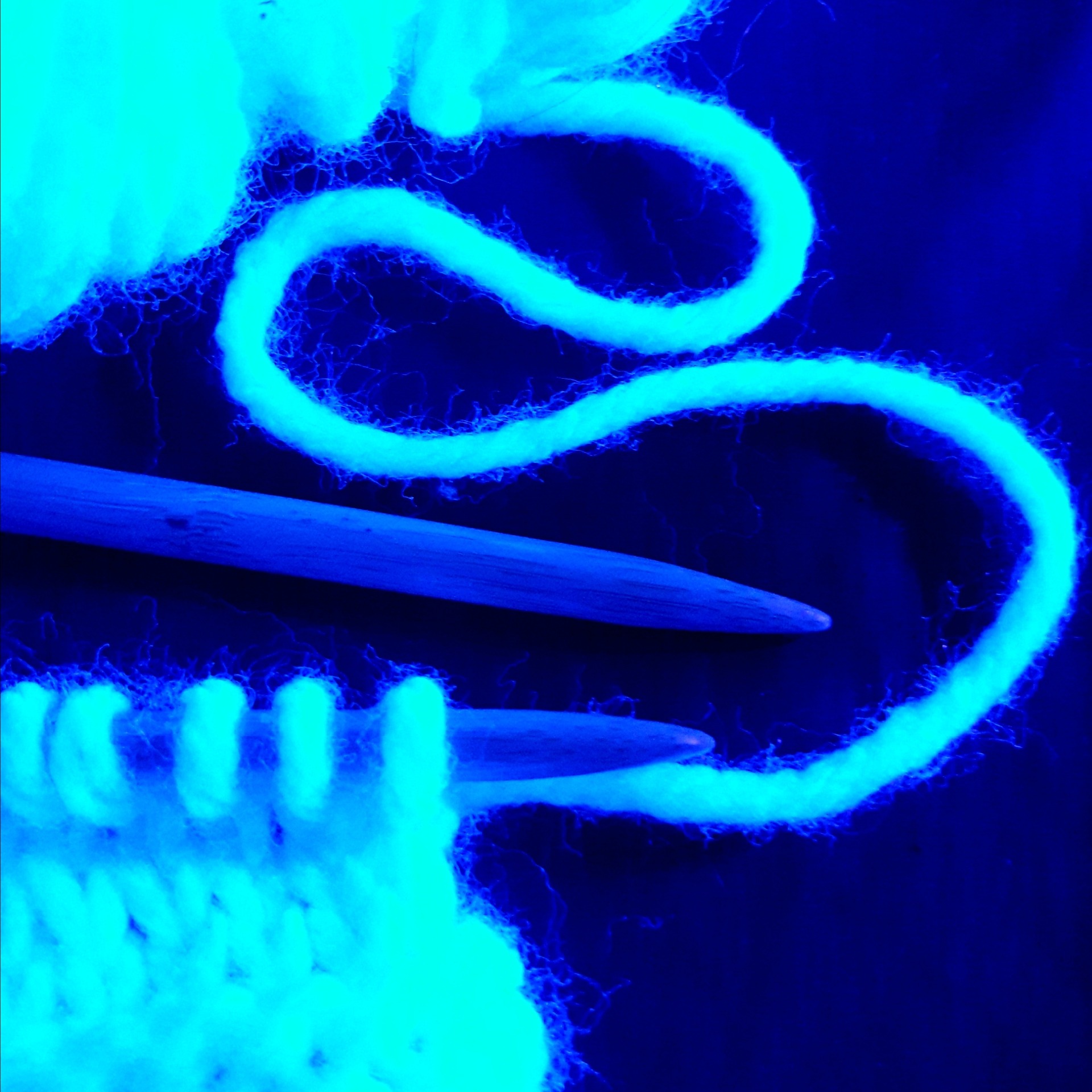

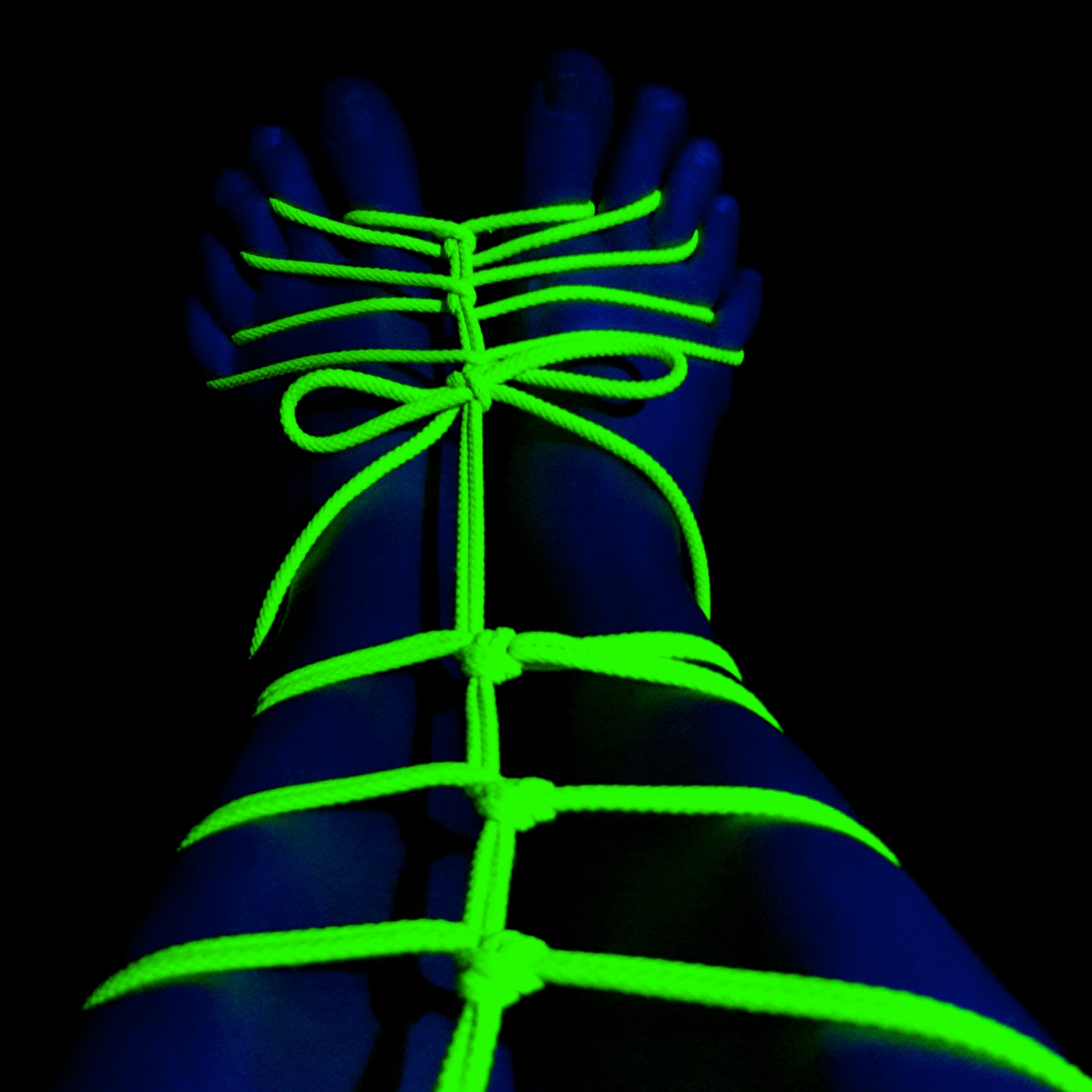

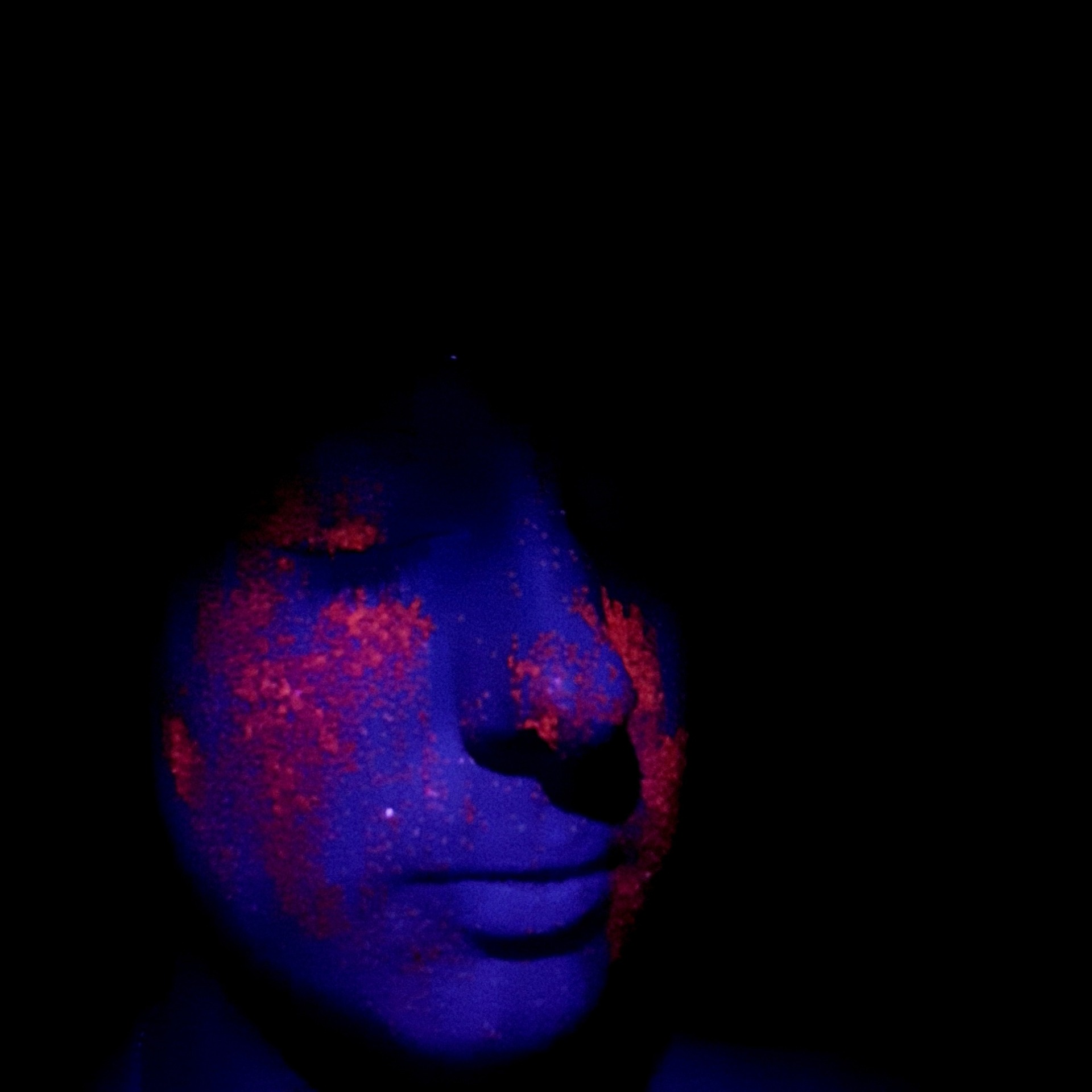

Naomi Morris

Concealed/Revealed/ExposedIII

Music by James Telford

Lighting by Hugh Pryor

naomiemorris.wixsite.com/portfolio

I'm an autistic artist speaking in moving digital pictures.

Day White

Day Blue

Day Yellow

Day Orange

Day Pink

13th Dec - 19th Dec

Samuel Fouracre

Transparent Things

6th Dec - 12th Dec

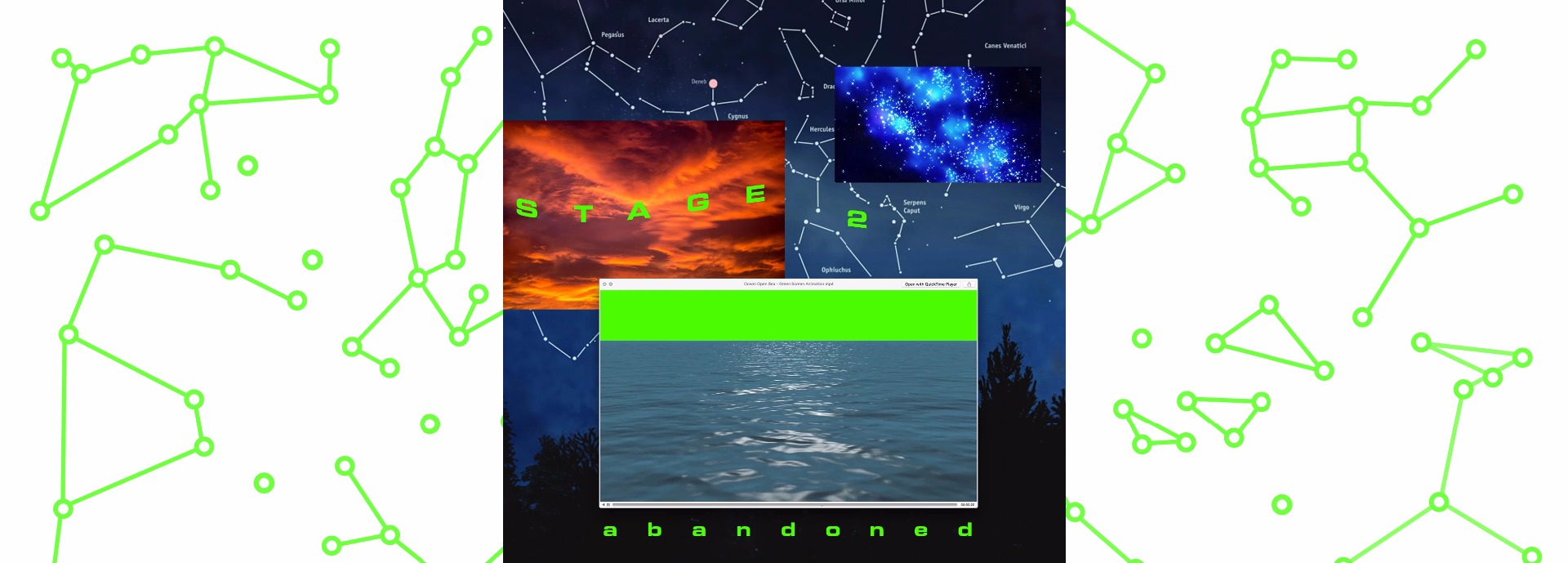

Marc Blazel

Streamed Communities

Autonomous online communities are emptying faster than any physical meeting place, leaving ghost towns in their wake. By exploring these abandoned communities you discover what the web once was, a free state of creativity and expression that is quickly fading from view. My work aims to reclaim these digital neighbourhoods and build cities out of their rubble. Thinking of space not just as a physical manifestation but also a digital concept. Finding home in a digital environment is of great comfort in a world that is increasingly intolerant of difference and subculture. But is online space as valid as IRL?

Looking at livestreaming as a community hub I will be exploring my own past and present to create new spaces using existing software and imagery.

Marc Blazel is a multidisciplinary artist working in video, image making and web theory. His current practice involves exploring and creating online communities that push the boundaries of digital expression. You can find his work @ www.marcblazel.com.

EXPLORING THE WILDLANDS - is there anything left?/Titans used to rule this place - read me, can you read me?

All my lives - Coming home, nothing is left/they took it. All I have is my memories now. DOWNLOAD CONTENT.

29th Nov - 5th Dec

Joshua Byron

decaf coffee & art bois

The spaces between emotions, vulnerability, and gender are some of the most tenuous and torrid we ever face. What does it mean to be in love? To date? To eat peppermint bark with a lover over coffee only to be ghosted? To be downturned by a cute guy on Tinder and end up listening to Joni Mitchell alone? There's a palpable feeling to being a single person in a city. The couples on the subway, the endless first dates in bars, the brunches with your girls...

Internet dating is not that different from IRL dating, except that people often feel like they can say more nasty shit. Nasty in a multiplicity of ways. Dating as nonbinary/trans femme has always been a navigation of vulnerability, walls, being a Virgo ice queen, and being a hopeless trans optimist at times. I've encountered endless types of bois from the softboi, the fuckboi, the artboi, the sadboi, the pretentiousboi, the hotboi, the gentleboi, the ghostboi to the ultimate softboi: the chasteboi. All have their unique strengths and unique, endless weaknesses.

Joshua Byron is a trans nonbinary video artist and writer based out of Brooklyn. They enjoy dumplings, espresso, Haruki Murakami novels, and Oprah. Their latest work is Idle Cosmopolitan, a webseries about a trans relationship writer who meets a gay ghost. Find them @lordjoshuabyron and at joshuabyron.com

22nd Nov - 28th Nov

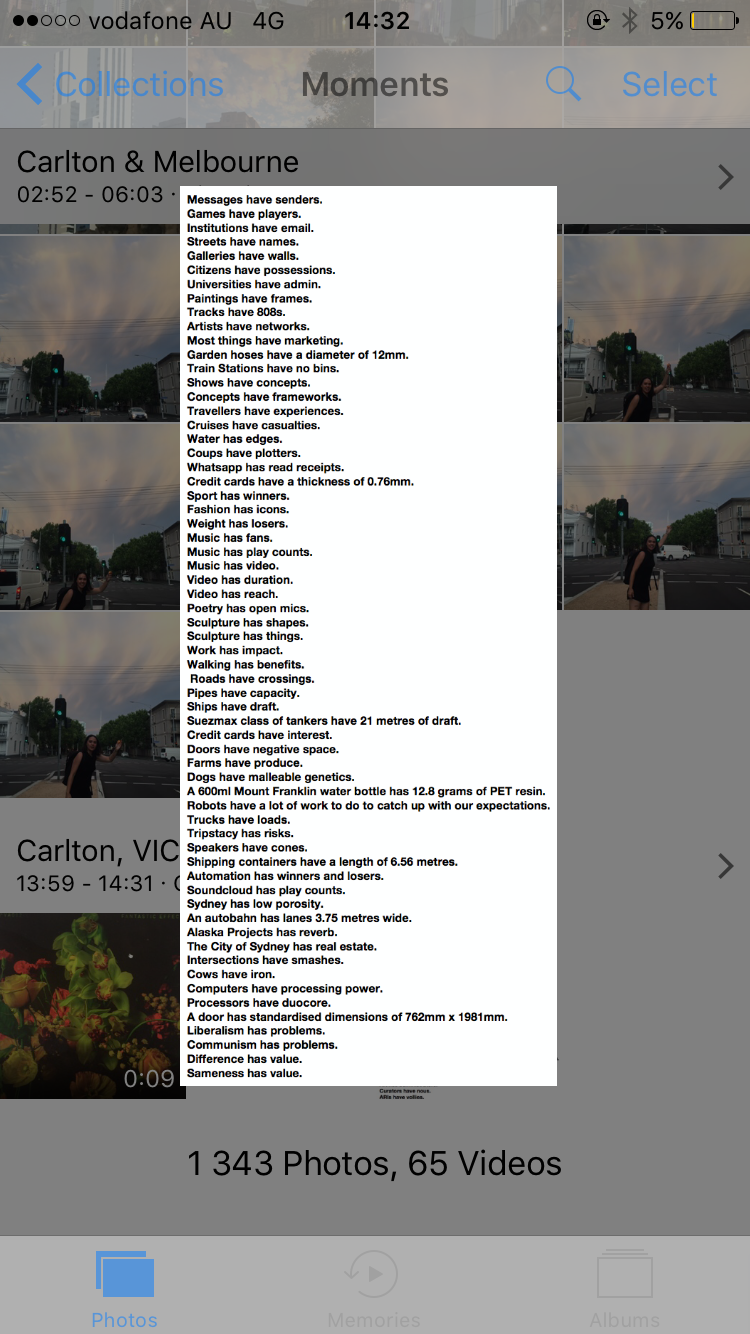

Tom Smith

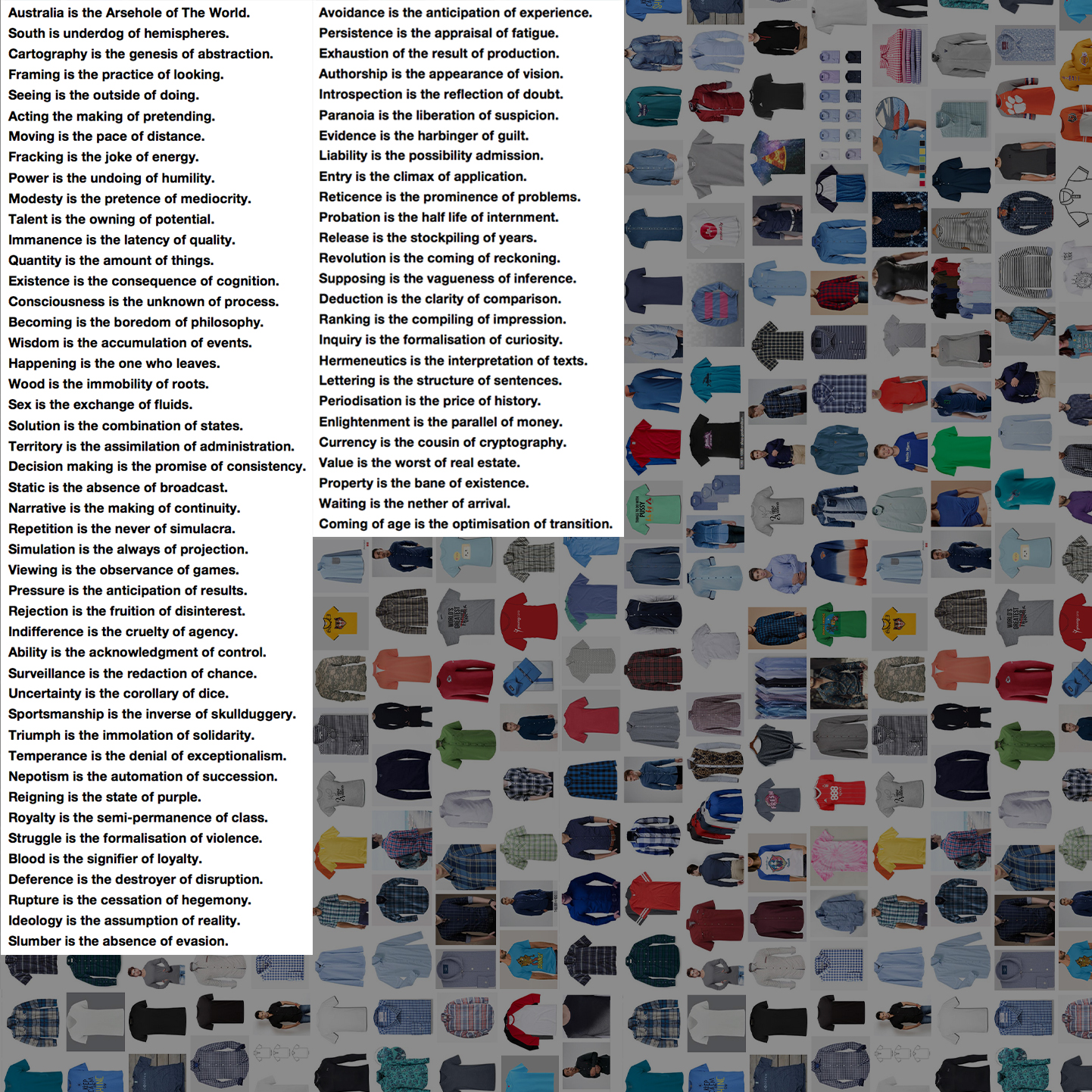

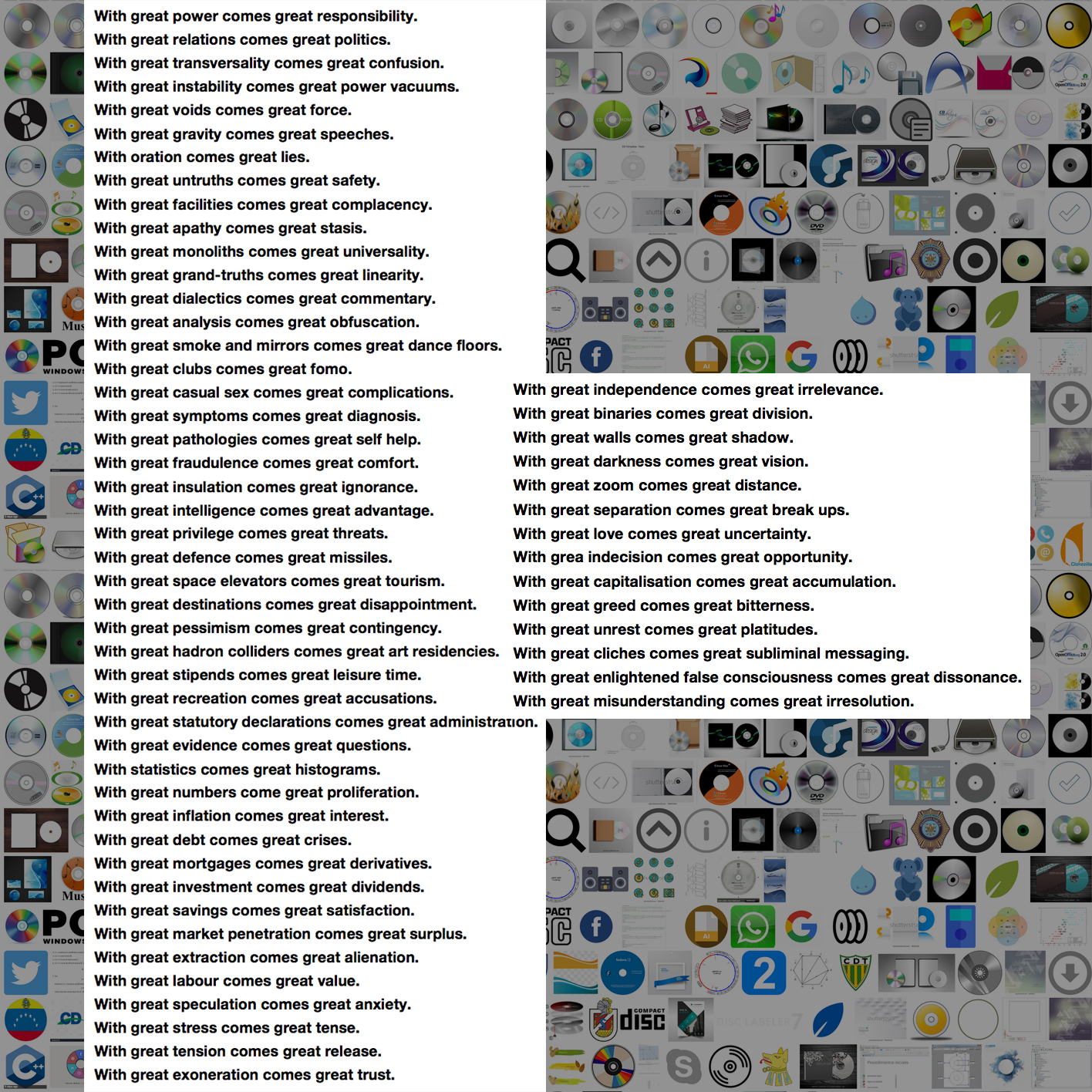

Snowclones

I have been thinking about linguistic structures, known since 2004 as ‘snowclones’.[i] Snowclones are phrases in which certain words can be altered and substituted, while retaining the phrases’ overall structure. They are malleable clichés in which the relation between entities is fixed, but the entities themselves are interchangeable. For instance, Arthur C Clarke’s maxim “Any sufficiently advanced technology is indistinguishable from magic” could be thought of as a snowclone: ‘technology’ and ‘magic’ can be substituted with other entities. It could be rewritten as “Any sufficiently advanced ____ is indistinguishable from ____”. More examples can be found here - https://snowclones.org/
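The substitution described above can be sketched in a few lines of Python. This is an illustration only and not part of the artist's work: the function name `fill_snowclone` and the use of `____` as the blank marker are assumptions made for the example.

```python
def fill_snowclone(template: str, *entities: str) -> str:
    """Fill each blank ("____") in a snowclone template, left to right.

    The relation between the blanks stays fixed; only the entities change.
    """
    parts = template.split("____")
    blanks = len(parts) - 1
    if blanks != len(entities):
        raise ValueError(
            f"template has {blanks} blanks but {len(entities)} entities were given"
        )
    result = parts[0]
    for entity, tail in zip(entities, parts[1:]):
        result += entity + tail
    return result


TEMPLATE = "Any sufficiently advanced ____ is indistinguishable from ____"

original = fill_snowclone(TEMPLATE, "technology", "magic")
variant = fill_snowclone(TEMPLATE, "portal", "a door")
print(original)
print(variant)
```

The template carries the fixed structure, while the arguments are the interchangeable entities.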

The snowclone exemplifies the structure of an example; for Giorgio Agamben, an ‘example’ is “Neither particular nor universal”.[ii] Examples are neither singular, nor generic—and yet, like snowclones, they are both these things. This week I will try to inhabit an eerie connection between the universal and the particular, by identifying snowclone-like structures in different mediums. I will try to represent the difference that inevitably leaks into iterative processes, and to play on the contradictory nature of various forms of standardisation.

[ii] Agamben, G. (1993). The coming community (Vol. 1). U of Minnesota Press.

* Any sufficiently advanced technology is indistinguishable from magic

Any sufficiently advanced practice is indistinguishable from doing things

Any sufficiently advanced software is indistinguishable from a preset

Any sufficiently advanced critique is indistinguishable from thinking

Any sufficiently advanced pastime is indistinguishable from leisure

Any sufficiently advanced artwork is indistinguishable from prestige

Any sufficiently advanced argument is indistinguishable from negation

Any sufficiently advanced meat is indistinguishable from an animal

Any sufficiently advanced weaponry is indistinguishable from violence

Any sufficiently advanced film is indistinguishable from cinema

Any sufficiently advanced geopolitical strategy is indistinguishable from self interest

Any sufficiently advanced corruption is indistinguishable from opportunism

Any sufficiently advanced platform is indistinguishable from a web-store

Any sufficiently advanced wav file is indistinguishable from music

Any sufficiently advanced jpg is indistinguishable from a tiff

Any sufficiently advanced pork product is indistinguishable from a pig

Any sufficiently advanced piano is indistinguishable from the bourgeoisie

Any sufficiently advanced tactic is indistinguishable from a repeatable plan

Any sufficiently advanced philosophy is indistinguishable from its thesis

Any sufficiently advanced dialectic is indistinguishable from the arc of history

Any sufficiently advanced book is indistinguishable from a long article

Any sufficiently advanced container ship is indistinguishable from its cargo

Any sufficiently advanced pandemic is indistinguishable from population control

Any sufficiently advanced pathogen is indistinguishable from its vector

Any sufficiently advanced relationship is indistinguishable from its narrative

Any sufficiently advanced ego is indistinguishable from a will to power

Any sufficiently advanced border region is indistinguishable from a threshold

Any sufficiently advanced portal is indistinguishable from a door

Any sufficiently advanced poem is indistinguishable from a sentence

Any sufficiently advanced sport is indistinguishable from a contest

Any sufficiently advanced lie is indistinguishable from swift boating

Any sufficiently advanced strategy is indistinguishable from a plan

Any sufficiently advanced synergy is indistinguishable from a connection

Any sufficiently advanced campaign is indistinguishable from coercion

Any sufficiently advanced political party is indistinguishable from a gang

Any sufficiently advanced musician is indistinguishable from an entertainer

Any sufficiently advanced tradesman is indistinguishable from a sculptor

Any sufficiently advanced human faeces is indistinguishable from canine faeces

Any sufficiently advanced error is indistinguishable from oversight

Any sufficiently advanced red herring is indistinguishable from the truth

Any sufficiently advanced truth is indistinguishable from a truism

Any sufficiently advanced cliche is indistinguishable from a classic

Any sufficiently advanced exhibition is indistinguishable from a selection

Any sufficiently advanced organism is indistinguishable from a model

Any sufficiently advanced recipe is indistinguishable from a dictum

Any sufficiently advanced dictum is indistinguishable from advice

Any sufficiently advanced advice is indistinguishable from instruction

Any sufficiently advanced instruction is indistinguishable from a proverb

Any sufficiently advanced proverb is indistinguishable from a commandment

Any sufficiently advanced commandment is indistinguishable from a law

Any sufficiently advanced law is indistinguishable from a pillar

Any sufficiently advanced pillar is indistinguishable from a foundation

Any sufficiently advanced foundation is indistinguishable from a charity

Any sufficiently advanced charity is indistinguishable from tax avoidance

Any sufficiently advanced tax avoidance is indistinguishable from liberalism

Any sufficiently advanced liberalism is indistinguishable from anarchy

Topoi Koinoi represents infrastructure and the generic, the most basic elements common to life in consumer economies—this focus on relational structures prompted me to think about snowclones. This work has a written component that scrolls bottom left.

The poem is a kind of expanded snowclone in the sense that it is designed to cumulatively broach a generic relational structure that frames all perceivable entities and qualities within others. Each entity is nested within another entity that is both its result and its genesis; an artist cannot exist without a network, and there is no artistic network without artists, and so on. In the same way that all these chains of association appear to merely describe themselves, I sometimes feel that artistic production can be thought of as a sort of tautology that always redescribes the new as it is produced over and over, and so in a sense I think of the new as being always inaugurated. In other words, the new itself is generic.

*X is the Y of Z

*With great power comes great responsibility

*Don't hate the player, hate the game.

Snowclones - Live Performance 28/11/2017

15th Nov - 21st Nov

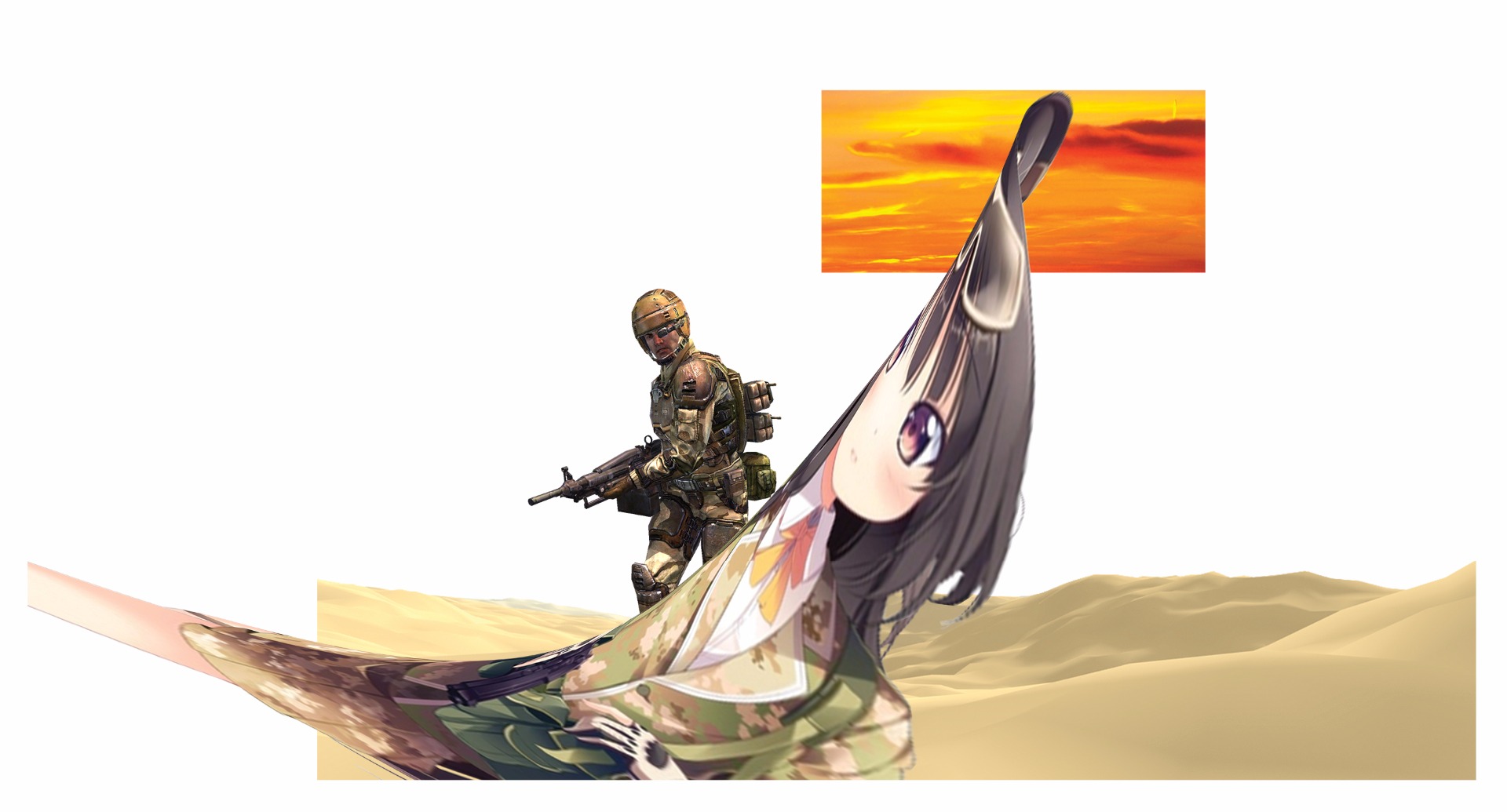

Kiah Reading

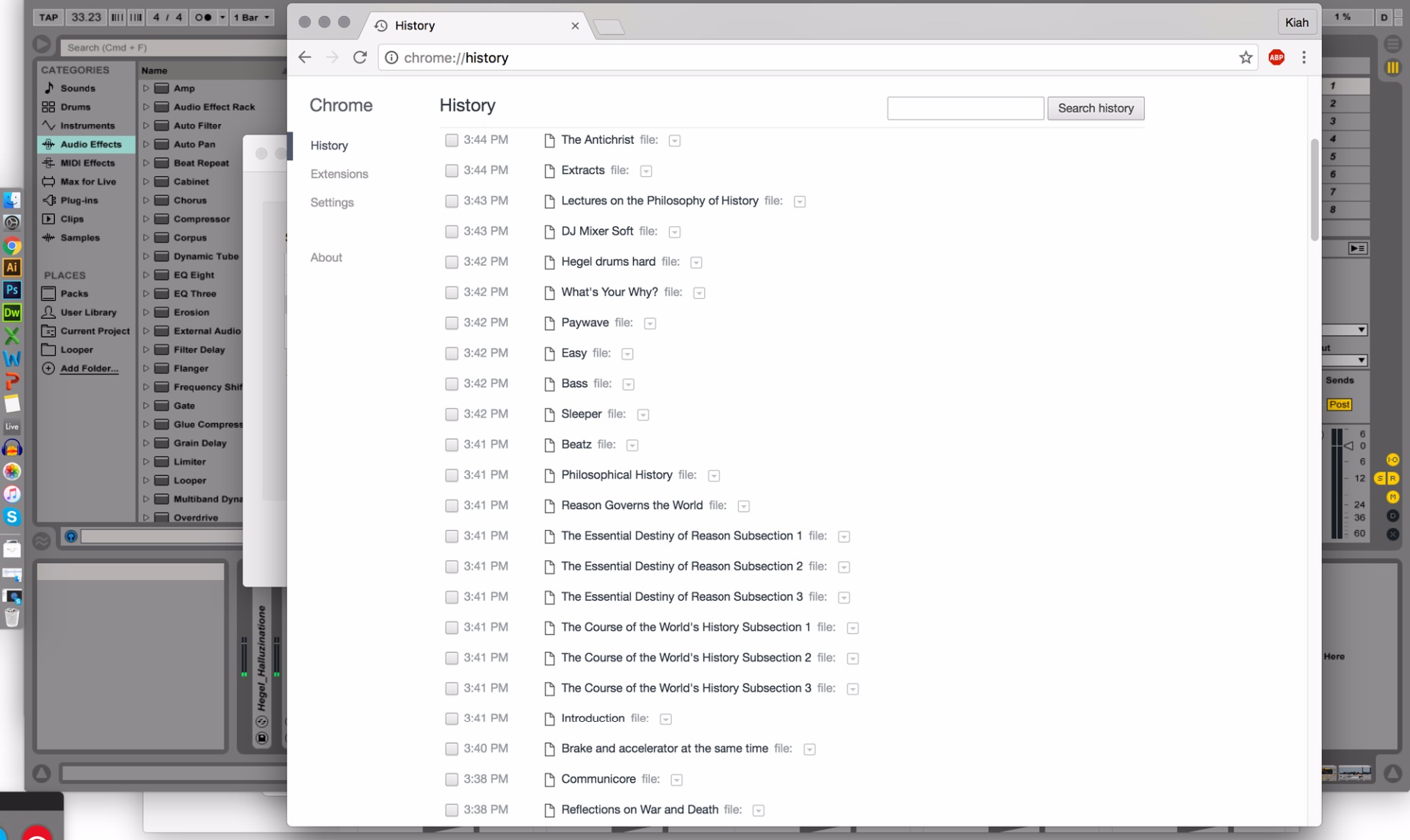

Pure reason & bass

When first talking about this project I had recently heard Keller Easterling quote someone as saying that truth is at a huge disadvantage because it’s only got the one story to tell. Stupidity is at a huge advantage because it can take on all the guises of truth and change its story constantly.

Pure reason and bass is audio and text fragmented and reduced to individual words, lines, ideas and pieced back together in a live, random, non-linear and never ending soliloquy, an infinite slippage based on the ambiguous side of language (as excess).
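The random, never-ending recombination described above might be sketched as follows. This is a hedged illustration in Python rather than the code behind the websites, which is not shown here; the fragment list and the name `soliloquy` are hypothetical.

```python
import random
from itertools import islice


def soliloquy(fragments, seed=None):
    """Yield fragments forever, each one chosen at random.

    The generator never ends; the caller decides how much to take.
    """
    if not fragments:
        raise ValueError("need at least one fragment")
    rng = random.Random(seed)
    while True:
        yield rng.choice(fragments)


# Hypothetical fragments, standing in for the cut-up audio and text.
FRAGMENTS = [
    "truth",
    "only one story to tell",
    "stupidity",
    "all the guises",
    "change its story constantly",
]

# Take the first eight fragments of an endless stream.
excerpt = list(islice(soliloquy(FRAGMENTS, seed=1), 8))
print(" / ".join(excerpt))
```

Because the stream has no end, any performance of it is an excerpt, cut off by whoever is listening.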

These websites, which can be performed by all, become exercises in a reopening of the indefinite, the act of exceeding established meanings and providing a moment for philosophical verses and soundFX to enter a zone where they lose their extrinsic references and coordinates and re-emerge confounded with new confusions.

Questions responded to by the wrong answer, new versions of old ideas, understanding more or less.

Browser history quickly becomes some kind of tabular, notations of performed sites and patterns.

Over the week I will continue to experiment with ways to code experiences that may mirror our online gestures but generate very different results.

Above and below are experiments in performative browsing where scroll events trigger electronic samples.

Dune pt. 1 for your listening +pleasure+ through this link.

Excerpt from performance below.

8th Nov - 14th Nov

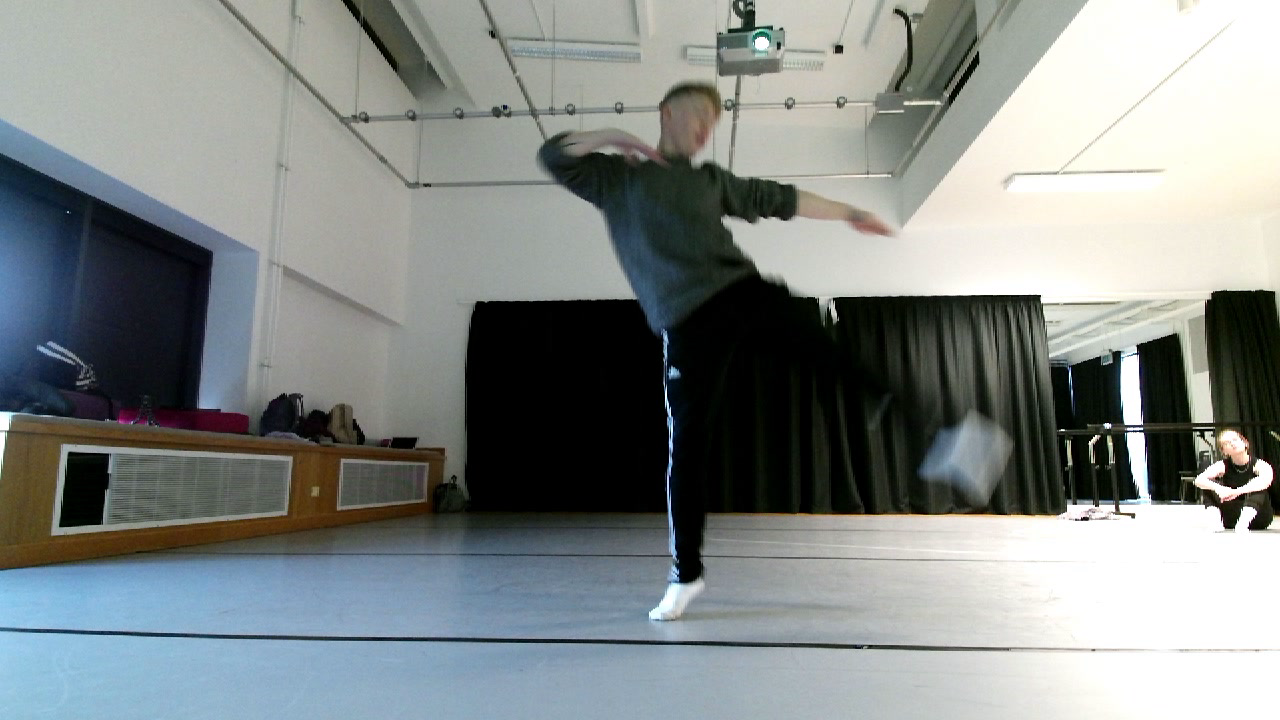

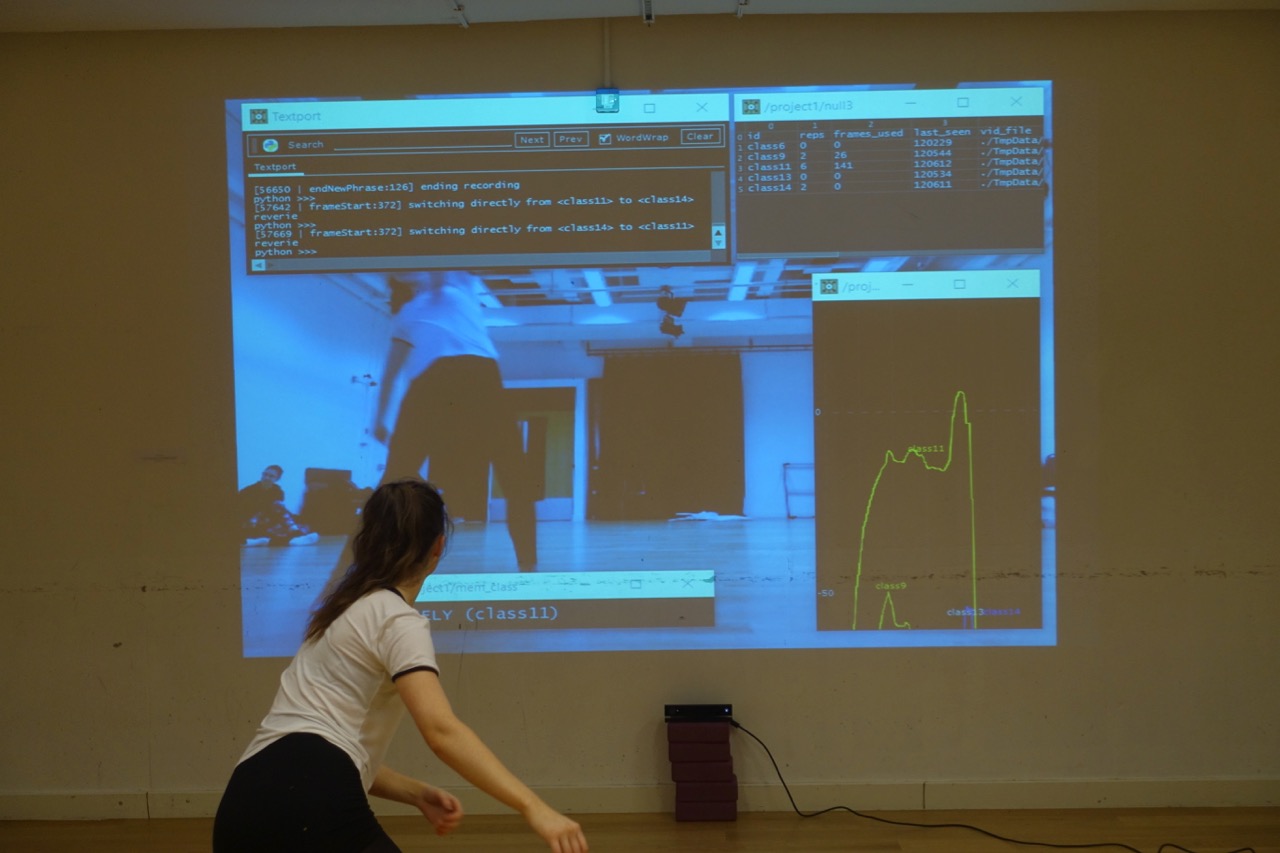

Sarah Levinsky & Adam Russell - Tools that Propel

TONIGHT @8pm Tues 14th Nov // Youtube live stream below...

Day 5 //

(Adam) It seems very fitting that as I was writing this update about trying to remember all the changes made in the last couple of days, I accidentally deleted my draft post with no backup, and had to remember what I wrote about trying to remember things. In fact this is a crude example of my core interest in Tools that Propel. Sedimentary layering of action upon memory becoming memory driving action becoming memory, a recursive folding back-and-forth over time, supported by some kind of inscription or mark making. Does it matter that we cannot remember what we just said, or wrote, or what movement we just performed? A tool can prompt us by playing back recordings of our past actions, but these recordings can never really encompass 'what we were doing' in the past. However this is not a problem, since what really matters is for the playing back of recordings to become a part of 'what we are doing' in the present. I was reminded today of the following quote:

"This table bears traces of my past life, for I have carved my initials on it and spilt ink on it. But these traces in themselves do not refer to the past: they are present" M. Merleau-Ponty (2002 / 1962) Phenomenology of Perception p.479

Day 4 //

Experimenting with various different video outputs/aesthetic choices. Looking at the impact on choreographic decision making and the performance space.

Day 3//

Thinking about group improvisation with Tools that Propel. How to relate to it when there are other bodies in the space and it is not directly tracking you. What is the materiality of the sensor/camera itself? What is the importance of interacting with the system as a material body rather than just a reflective mirror/projection/distortion of your live movement? Keir mentions he thinks that there are three different performers within the improvisation.... the 'active performer' who knows that they are being tracked and is very deliberately front facing... the 'subactive performer' who is dancing with the active performer and trying to become the one in control, the one being tracked... the 'passive performer' who knows that they are in the dance/composition but not being tracked, who is interested in creating (incidental) presence in the memories. What is the sensor or Tools that Propel judging as 'important' and what becomes interesting because it is captured even though it wasn't focussed upon...? How does this infect/affect the dancer? There are lots of conversations about what the system reveals to them and how that isn't what they thought they wanted to focus on in their movement but that there is information in it for them to use...

We also started to explore how Tools that Propel might forget memories not simply based on being the 'oldest' memory (i.e. forgetting number 1 when number 21 is made and so on) but on the basis of it being the most used. There is a question over whether it should be based on the number of times that memory has been played or the time spent in the memory.
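The two candidate measures of 'most used' can be compared in a small sketch. This is not the Tools that Propel code; the `Memory` structure, its field names and the tie-breaking rule are assumptions made purely for illustration.

```python
from dataclasses import dataclass


@dataclass
class Memory:
    """One recorded movement clip (hypothetical structure)."""
    ident: int                   # order of creation; 1 is the oldest
    play_count: int = 0          # number of times the clip was played back
    seconds_played: float = 0.0  # total time spent inside the clip


def pick_memory_to_forget(memories, by="play_count"):
    """Return the most-used memory under the chosen measure.

    by="play_count"     -> the clip played back most often
    by="seconds_played" -> the clip with the most playback time
    Ties go to the oldest memory (assumed rule).
    """
    if by not in ("play_count", "seconds_played"):
        raise ValueError(f"unknown measure: {by}")
    return max(memories, key=lambda m: (getattr(m, by), -m.ident))


memories = [
    Memory(1, play_count=2, seconds_played=40.0),
    Memory(2, play_count=5, seconds_played=12.5),
    Memory(3, play_count=5, seconds_played=9.0),
]

by_count = pick_memory_to_forget(memories, by="play_count")
by_time = pick_memory_to_forget(memories, by="seconds_played")
print(by_count.ident, by_time.ident)
```

In this made-up data the two measures disagree: a clip that is triggered often but only briefly is forgotten under one rule, while a clip that is rarely triggered but dwelt in for a long time is forgotten under the other.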

Day 2 //

Today we were joined by two new dancers (Keir Clyne and Katherine Sweet) as well as most of those from yesterday. It has been so interesting working with the dancers with Tools that Propel. The system has become a choreographic collaborator and with every time the dancers improvise with it we learn more about its potential. Yesterday there were interesting discoveries about what was happening for each of them on their first encounter with it, from some of them wanting to cheat the system, creating new movement to try to ensure that it didn’t recognise them, breaking their own natural movement pathways by exploring new trajectories, and others talking about retracing their steps, getting lost in a maze and moving through the data. As they learnt to play the system or interact with Tools that Propel as a collaborator or dance partner they have become more playful and more sensitive to the potential of their exchange with the ‘decisions’ made by the system. They were developing duets with it, each with a different motivation or task – for example to try to focus on exploring an emotional expression from the encounter, to focus on the incidental or the chance element – what the system deems is an important memory to play back – or to make decisions about how much to engage or reject its offerings/decisions/memories.

Today we explored the idea of feeding it with a choreographic phrase that each dancer had already developed. This felt very different – like playing amongst saturated memories – and suggested the potential of it to make their choreographic decision-making more complex, impacting on the ways they thought about the material, its dynamics, its directions, where it took place in space. They each seemed to have a different relationship with the system, and sometimes that seemed to be formed by a number of variables, for example how much it seemed to be struggling with the tracking of their movement (some movement seemingly being more easily aligned by it), or how closely it tracked it, or the fact that it seemed sometimes to ignore or not respond to a particular type of movement, as well as the motivations of each dancer towards it. There were bugs in the system today too – but this is an enquiry which accepts them too and just allows that to be part of what produces the new movement. Sadly, due to a bug we lost most of the session footage from this task.

(the video above shows side-by-side comparison of the input and output video streams; our movers could only see the right-hand image)

Day 1 //

We spent much of today in the studio introducing Tools that Propel to five dance students who will be assisting our residency over the coming week: Rebecca Moss, Brandon Holloway, Holly Jones, Maria Evans and Yi Xuan Kwek

We worked on solo and group improvisations, exploring different ways of working with the system. We began to prototype a multi-body tracking setup but this is not quite ready to show yet. By the end of the afternoon we switched back to single-body tracking and were encouraging our movers to 'compete for focus' by moving up close to the Kinect sensor and getting in each other's way. This created an interesting in-out motion which we hadn't seen so much previously, and more chaotic entanglements of multiple bodies in shot. It was often unclear just who was currently driving the system.

For some months we have been working together to develop an interactive digital environment called Tools that Propel.

Tools that Propel is an interactive video installation or tool that invites participants to evade stable classification of their movements as they improvise with it. To reveal their own live reflection, the participant must present the system with motion and gestures that are not recognised. If it recognises and has tracked their gestures they find themselves engaging with similar footage from both their own recent past as well as the traces of movement made by other people who have interacted with the system before them. Participants can try to bring back these recordings, creating an onscreen choreography from present and past movement, personal and collective memories.

1st Nov - 7th Nov

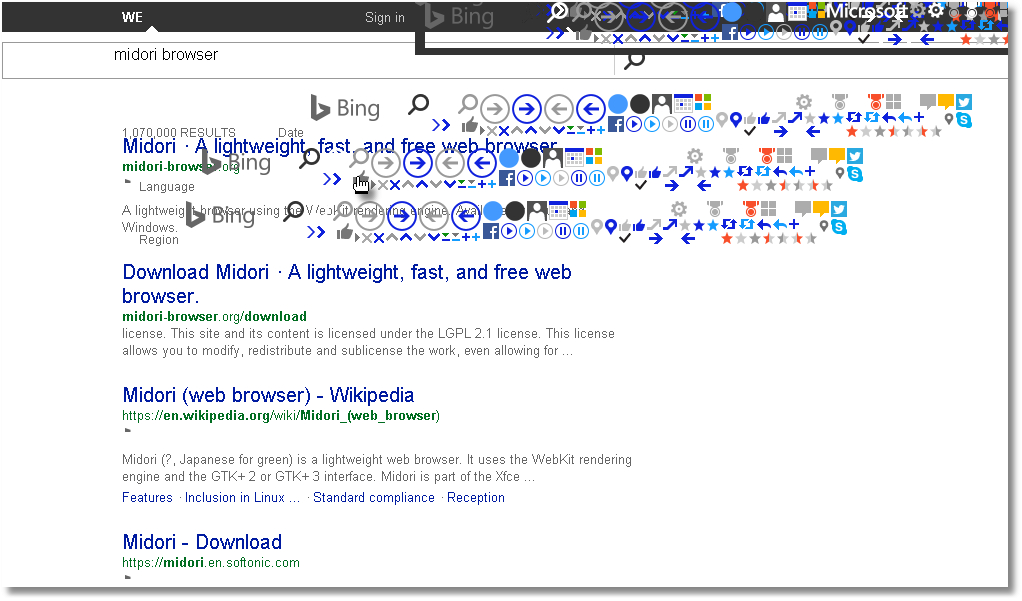

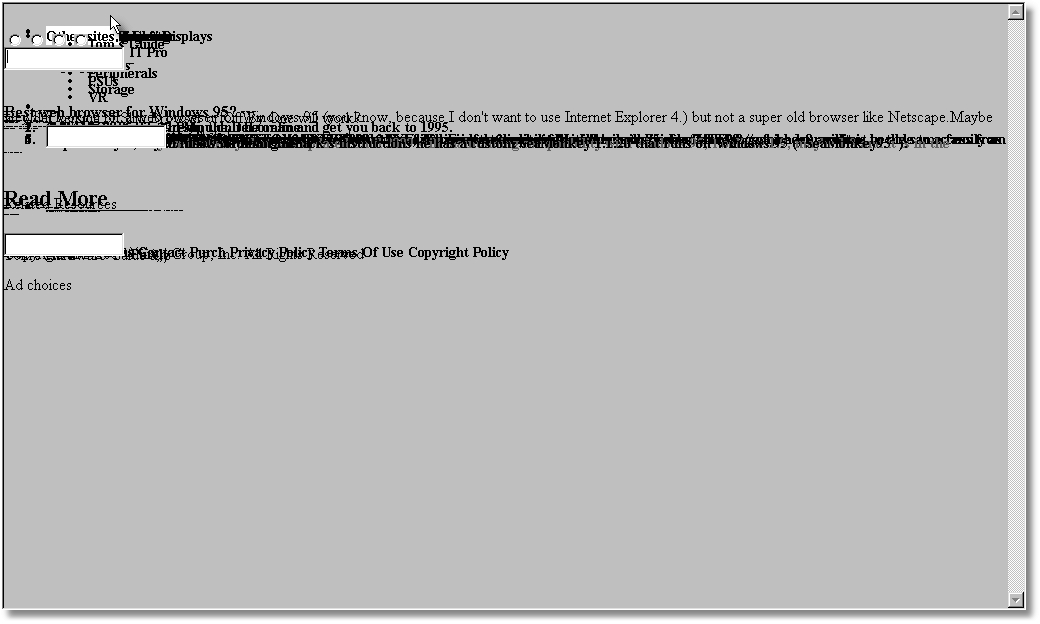

Ian Keaveny - The digital past is a foreign language

My work is based on forcing software and hardware to the point of failure, through hex editing (changing hex values in a video or audio file), sonification (opening a video or image in an audio editor), misinterpretation (similar to sonification, but interpreting, say, a text file as sound) and the exploitation of hardware faults found in older computers when mismatched with more modern operating systems: what is known as glitch art. The idea for my residency started with a simple question: what would the Internet look like to Windows 95, how would it read it (given how much the Internet has changed visually and technically since then), and what kind of work could I make with the programs of that era? If the digital past is a foreign language, could I learn to speak it again, and more importantly, where would I like to go today?
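As a rough illustration of misinterpretation, the sketch below wraps arbitrary bytes in a WAV header so that an audio player reads them as sound. It uses Python's standard `wave` module and is not the artist's actual workflow, which relies on an audio editor; the function name `bytes_to_wav`, the 8 kHz sample rate and the 8-bit mono format are assumptions.

```python
import io
import wave


def bytes_to_wav(data: bytes, sample_rate: int = 8000) -> bytes:
    """Wrap arbitrary bytes in a WAV header.

    Each input byte is read as one unsigned 8-bit mono sample.
    The data itself is not altered, only relabelled as audio.
    """
    buffer = io.BytesIO()
    with wave.open(buffer, "wb") as wav:
        wav.setnchannels(1)            # mono
        wav.setsampwidth(1)            # 1 byte per sample (8-bit PCM)
        wav.setframerate(sample_rate)  # assumed playback rate
        wav.writeframes(data)
    return buffer.getvalue()


# A text string standing in for a text file.
text = "Where would you like to go today?".encode("ascii")
wav_bytes = bytes_to_wav(text)

# Reading the result back shows the same bytes, now framed as samples.
with wave.open(io.BytesIO(wav_bytes), "rb") as wav:
    frame_count = wav.getnframes()
    samples = wav.readframes(frame_count)
print(frame_count, samples == text)
```

Nothing in the file changes except the label: the same bytes that were letters are now heard as samples.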

The installation process sometimes feels like shamanism, coaxing old hardware back into life, revisiting the digital past as an archaeological process involving failure, burnt-out PSUs and case-cut fingers. The video shows the Win95 installation process, recorded using a webcam and then cut into images; each image was converted to PPM format, hex edited, then reassembled using ffmpeg in Linux Mint. The video was then datamoshed using a Ruby script and finally rescued using Flowblade.
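The hex-editing step on a single PPM frame could look something like the sketch below. It is an approximation in Python, assuming a simple binary PPM (P6) file with no comment lines in its header; the helper names, the random choice of offsets and the number of edits are all hypothetical, and the actual edits were presumably made by hand in a hex editor.

```python
import random
import re

# Header of a simple binary PPM: magic number, width, height, max value,
# then exactly one whitespace byte before the raw RGB data.
PPM_HEADER = re.compile(rb"P6\s+(\d+)\s+(\d+)\s+(\d+)\s")


def make_ppm(width: int, height: int, rgb=(128, 128, 128)) -> bytes:
    """Build a tiny single-colour PPM frame to experiment on."""
    header = f"P6\n{width} {height}\n255\n".encode("ascii")
    return header + bytes(rgb) * (width * height)


def hex_edit(ppm: bytes, edits: int, seed: int = 0) -> bytes:
    """Overwrite `edits` randomly chosen pixel bytes with random values.

    The header is left intact so that the file still opens as an image.
    """
    match = PPM_HEADER.match(ppm)
    if match is None:
        raise ValueError("not a simple binary PPM (P6) file")
    start = match.end()  # first byte of pixel data
    if start >= len(ppm):
        raise ValueError("file contains no pixel data")
    rng = random.Random(seed)
    data = bytearray(ppm)
    for _ in range(edits):
        offset = rng.randrange(start, len(data))
        data[offset] = rng.randrange(256)
    return bytes(data)


frame = make_ppm(4, 4)
glitched = hex_edit(frame, edits=10)
print(len(frame), len(glitched))
```

PPM suits this kind of editing because the pixel data is stored uncompressed, so a changed byte alters one colour value rather than corrupting the whole file.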

Having installed it, what will I do with it, and what does my desktop sound like?

I found an obscure program that was designed for blind people to use as a kind of radar. Misusing the program, I explored the desktop and found I could create feedback loops and disintegrating icons and text, like a continuous asemic dialog.

Internet Explorer 3 vs the web: browser error in rendering text.

What am I seeing, how do I fix this, do I want to fix this? (browser rendering error - Internet Explorer 3)

Today I was lost in the screensaver maze

So I looked at the maze as sound and through Processing, using a webcam to feed the output of the screensaver in motion to a second computer running a modified pixelsort script, adding sound from the earlier video and upscaling.

Browser render error and misdirection

Percent percent percent percent dollardollardollar +++++////// This is an earlier video of the Win95 maze screensaver, sonified using Audacity then re-rendered using Flowblade (broken files need fixing). The sound is created using a text-to-speech engine fed with a screen-grab image rendered into text using pinxy (an ancient ASCII art maker): image as text as sound, screensaver as mapped sound as video.

Exploring the extras hidden away on the installation CD (slitscan of Hover)

Language-activated desktop.

Partly sunny (HTML code and text from MSN via Internet Explorer 3, turned into speech/chant; video is an asciified version of a video found on the installation disc, turned into Dirac then hex-edited).

Video taken from the installation disc, chopped into stills using an asciiscript in Processing, reassembled as MP4, then chopped into stills and run through the glic encoder in Processing, finally reassembled and uploaded. Happy Days in an alternate reality.

Where would you like to go today?

With the way that I work, and by using different codecs and methods of attacking those codecs (the above is WebM, one of my favourites), similar source material can give widely differing results, each codec having its own texture and breaking points. Again the source is a Win95 screensaver read to file using an HD webcam on a secondary computer, then hex edited and recaptured during playback using a screen-capture program - broken files will often not re-encode correctly but will play in, say, VLC or mpv. The sound is a series of texts (some system files from Win 95 read by a text-to-speech engine) played with in Audacity and then layered.

Access denied - wait, what just happened there?